Lamé G, Simmons RK. From behavioural simulation to computer models: how simulation can be used to improve healthcare management and policy. BMJ Simulation and Technology Enhanced Learning. Published Online First: 20 October 2018. doi:10.1136/bmjstel-2018-00037

From behavioural simulation to computer models: how simulation can be used to improve healthcare management and policy

Why it matters

Healthcare systems are complex, and made up of huge numbers of diverse people and resources. This can make them challenging to understand and even more challenging to improve. For healthcare managers and policy makers, it’s often difficult to know how one decision might impact all the system’s interdependent parts.

Faced with similar challenges, managers in other sectors have turned to simulation to see how individuals and organisations react in certain situations. Simulations replicate real-world scenarios in controlled environments and provide a risk-free way to test out system changes.

Simulation can be conducted in vivo (with live participants), in silico (using a computer program), or a combination of the two where human participants interact with computer simulations. It can be used to observe situations that would otherwise be impossible, and to train staff in policies and procedures.

Despite this potential, simulation hasn’t been widely used in healthcare management. So this paper provides an overview of how simulation can be used to improve management and policy making in healthcare.

What we found

- Simulation can be a safe, quick, and more affordable way to experiment with system changes.

- It can help managers, policy-makers and clinicians to understand how systems work and how they can be redesigned or improved to better serve the needs of patients.

- Simulation-based studies require expertise and investment to run, and their external validity may be reduced because they are artificial.

- Healthcare managers, policy makers, and improvement researchers can learn from other disciplines where simulation has been used effectively.

At a glance

Abstract

Simulation is a technique that evokes or replicates substantial aspects of the real world, in order to experiment with a simplified imitation of an operations system, for the purpose of better understanding and/or improving that system. Simulation provides a safe environment for investigating individual and organisational behaviour and a risk-free testbed for new policies and procedures. Therefore, it can complement or replace direct field observations and trial-and-error approaches, which can be time consuming, costly and difficult to carry out. However, simulation has low adoption as a research and improvement tool in healthcare management and policy-making. The literature on simulation in these fields is dispersed across different disciplinary traditions and typically focuses on a single simulation method. In this article, we examine how simulation can be used to investigate, understand and improve management and policy-making in healthcare organisations. We develop the rationale for using simulation and provide an integrative overview of existing approaches, using examples of in vivo behavioural simulations involving live participants, pure in silico computer simulations and intermediate approaches (virtual simulation) where human participants interact with computer simulations of health organisations. We also discuss the combination of these approaches to organisational simulation and the evaluation of simulation-based interventions.

Introduction

Healthcare systems have long been described as complex systems.1 2 They involve a large number of diverse participants (physicians, patients, nurses, pharmacists, etc) and resources (medical equipment, IT infrastructure, facilities, etc), the actions of which are strongly interdependent.

In such complex systems, managers and policy-makers, that is, individuals who make decisions that will affect a larger number of people (a category that includes board members and executives, as well as administrative, clinical and nursing leaders), may struggle to grasp the complex relationships between multiple factors in the systems they have to manage, and therefore to assess the impact of certain choices. By testing their potential decisions in a simulated environment, managers and policy-makers can safely explore different options and evaluate their impact, and researchers can learn about managerial decision-making behaviour. Simulation can also be used for training managers; they can practise their role in simulated scenarios where they can safely experiment, learn about what affects the behaviour of the system they manage and then transfer this knowledge to real-life management.3–5 The situations and the decisions involved will differ depending on the role of the participants. A frontline clinical manager may want to know if it is more effective to hire an additional nurse or an additional consultant to reduce waiting times, whereas a national policy-maker may need to understand the dynamics of clinical employment to assess whether there is a need to train more doctors and nurses. Situations differ, but simulation offers a panel of methods that can adapt to different levels and types of decision-making scenarios.

In healthcare, the best-known simulation approach is clinical simulation, where clinicians reproduce clinical encounters, often for training purposes. These approaches are based on frontline staff roles and perceptions of healthcare systems. For instance, clinicians may reproduce the conditions of a specific operation so that an individual or a team can practise. However, when placed in the position of managing an organisation, individuals have a more aggregated view: they look at flows and groups rather than individual patients, and they design and implement organisational processes rather than individual treatment plans. The decisions made by managers and policy-makers may span years or even decades. This should be reflected in the simulations used to study them. Management is also characterised by a relative lack of testable technical or procedural skills. Although evidence-based management is a trending topic, there are few prescriptions for managerial behaviour which could be evaluated.6 Consequently, simulation approaches for healthcare management and policy-making have distinct requirements from those used to study and improve clinical practice.7

The literature on simulation for healthcare management and policy-making is dispersed across different disciplinary traditions (health economics, operational research, management, health services research, clinical journals) and typically focuses on a single simulation method. Simulation continues to make little impact on healthcare management and policy-making.5 8 In this article, we examine how simulation can be used to investigate, understand and improve management and policy-making in healthcare organisations and provide an integrative overview of existing approaches.

Simulation approaches for researching and improving healthcare management and policy-making

There is no readily available definition of simulation for healthcare management and policy-making that encompasses the whole range of simulation methods. In a clinical context, Gaba defines clinical simulation as ‘a technique–not a technology–to replace or amplify real experiences with guided experiences that evoke or replicate substantial aspects of the real world in a fully interactive manner’.9 In operational research, Robinson defines computer simulation as ‘experimentation with a simplified imitation (on a computer) of an operations system as it progresses through time, for the purpose of better understanding and/or improving that system’.10 From these two definitions, we define simulation for healthcare management and policy-making as ‘a technique that evokes or replicates substantial aspects of the real world, in order to experiment with a simplified imitation of an operations system, for the purpose of better understanding and/or improving that system.’ This definition covers a wide range of practices and projects in healthcare management and policy-making.9 11–15

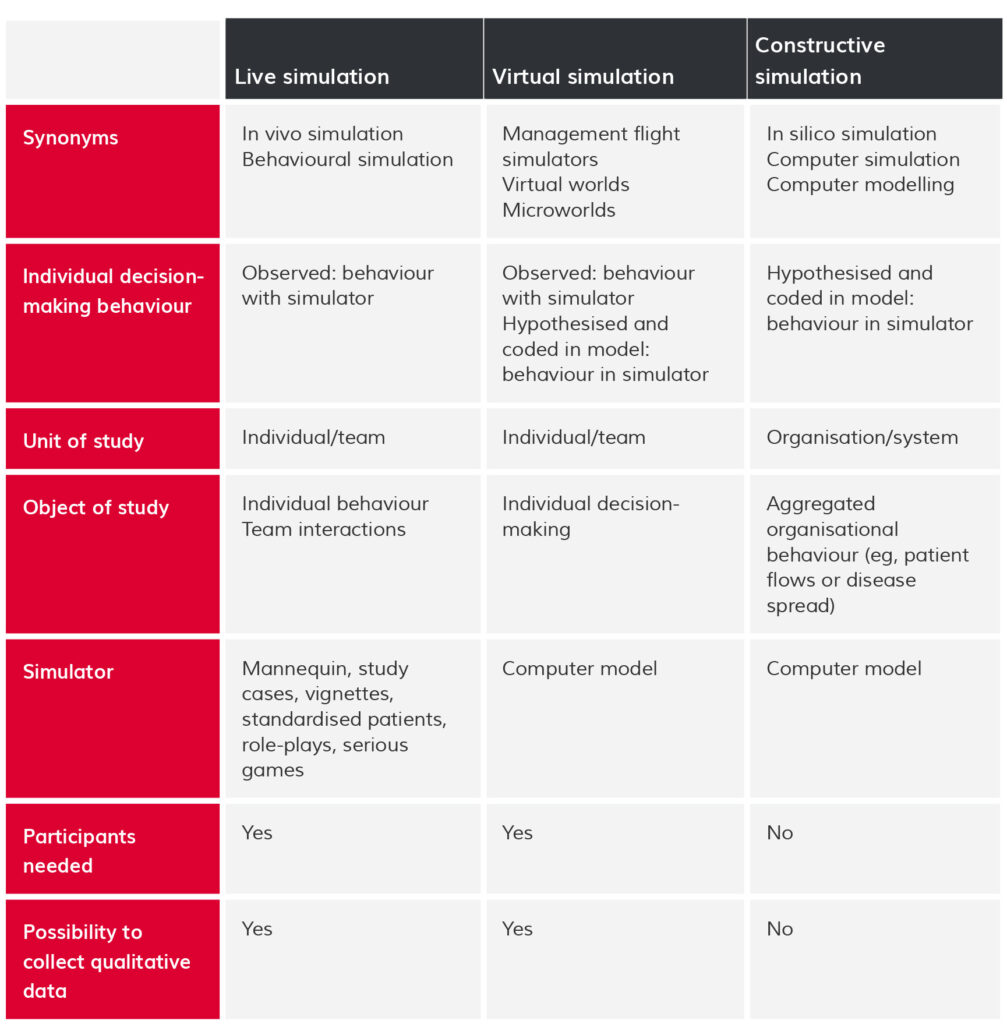

In the defence and military sector, simulation applications are categorised using the live, virtual and constructive typology11:

- Live simulations involve real people operating real systems. They have also been referred to as behavioural simulations, clinical simulations or in vivo simulations.

- Constructive models or simulations involve simulated people operating simulated systems. They are sometimes called in silico simulations or computer simulations.

- Virtual simulations sit in-between the two previous approaches. They involve real people operating simulated systems (eg, flight simulators) and have sometimes been labelled management flight simulators or microworlds.

We follow this categorisation to structure our presentation of the different options for simulating healthcare management and policy-making. We now detail these three approaches to simulation.

Live simulation

Live simulation is often used for medical education16 and has also been proposed for improvement17 and research purposes18 in health services. However, it is mostly used at the clinical level, and the use of live simulation to inform healthcare management and policy-making remains limited.5

The objective in live simulation is to study individual and team behaviour by asking participants to respond to a set of circumstances. For instance, a new policy or a new management standard is introduced in the simulation and the way participants adapt their behaviour is observed.5 Such simulations might involve patient mannequins,19 20 board games representing the functioning of healthcare organisations,7 role-plays, large-scale drills, for example, mass casualty incidents (MCIs) drills,21 or case studies and vignettes (ie, narratives of real situations in healthcare organisations, to which managers are asked to react).

When live simulation is used for training, the objective is for participating managers to realise how their decisions and attitudes affect the performance of the simulated healthcare system. In other improvement projects, the aim is to better understand the decision-making processes and the interactions between participating managers and to characterise their behaviour under different conditions. In a project on safety leadership, Singer et al and Cooper et al designed a training programme to improve safety leadership among clinical and non-clinical hospital managers.19 20 The main element of this 1-day training was a simulation exercise, using a mannequin, where non-clinical managers participated in a simulated operation. The scenarios were designed to offer participants the opportunity to demonstrate important safety leadership skills: showing you ‘really care’, being welcoming/non-defensive, encouraging speaking up, facilitating communication and teamwork, taking action, mobilising information from across the organisation and seeking input from others.19 Evaluation was performed through debriefing sessions, questionnaires on how participants enjoyed the training and a follow-up debrief 3–7 months after the training. In the follow-up, participants mentioned changes in their own and others’ behaviours, which they attributed to the training. They pointed to specific changes, such as introducing weekly action lists pinned on the information boards in their units to improve ‘action-taking’ or organising brainstorming sessions to ‘seek input’ from staff on improvement projects. In addition to the learning generated for participants, the programme yielded interesting observations on the barriers and inhibitors to speaking up encountered by managers. In another programme, Rosen et al7 created a board game where participants were put in the position of a newly appointed Chief Executive Officer in a hospital with a failing safety record.
Participants started with limited information on the hospital, and a predefined organisational structure (hierarchies, goal-setting and reporting structure). They had the opportunity to acquire information from different sources (frontline improvement team, external management consultant, ‘expert’ external process improvement team, executive walk-round) to inform changes in the organisation. The debrief sessions yielded insights on managers’ reasoning. For instance, when discussing the possibility of holding people accountable for reaching safety improvement objectives through rewards or penalties, participants objected that the data used to measure these objectives were of poor quality and that the objectives were sometimes unachievable. Evaluation questionnaires showed that participants enjoyed the simulation and that it enabled discussions about new strategies for improving patient safety. However, there was no follow-up evaluation, a common issue with live simulations in healthcare management and policy-making,5 which makes it difficult to assess the impact of the training on participants’ attitudes and performance in their professional role.

For trainees, this type of simulation provides a way to experiment with decision-making in a safe environment, without consequences on the real system, and to obtain feedback from other participants and from facilitators on their behaviour and performance. For researchers, the strength of this approach compared with direct field observation is that the organiser of the simulation has control over the environment and the scenario, which gives high internal validity to this kind of study.22 It is also easy to record the full simulation for in-depth analysis.22 However, the practical organisation of live simulations can be challenging, especially if high-fidelity patient mannequins are needed (as in the above example). Live simulation can be costly: it often requires dedicated space and expertise, and recruitment can be difficult when training is not the prime objective.23 24 Guidelines for using in vivo simulation for healthcare management and policy have been proposed by Cohen et al.5 These outline the design of simulation approaches, how to analyse the data and the impact on participants.

Constructive simulation

Constructive simulations use computer models that reproduce a full system in silico, for example, a ward, a hospital, a local healthcare service or a national healthcare system. Certain rules governing the system are coded in the model, such as the allocation of resources, priority rules in waiting queues or the preferences of individual agents (patients, staff) in certain situations. These rules will be coded into the computer simulation, which will then be run to see how the simulated system behaves.

The main advantage of these models is that they allow the reproduction of large-scale systems and long timescales. They allow assessment of the impact of various policies and resource configurations on the performance of these systems through ‘what-if’ scenarios. The models can also determine levels of uncertainty in large systems and foster dialogue and learning between stakeholders.8 As such, computer modelling could be very useful for healthcare policy-making and management. An important challenge common to all computer modelling approaches is the availability of data,25 an area where modellers may need to be resourceful to gather appropriate information.26 In practice, modellers have also found it challenging to engage healthcare professionals in simulation projects aimed at performance improvement.27–29 This is linked to the high workload in health services, low awareness of computer simulation methods and the ‘messiness’ of healthcare systems compared with other industrial systems, which makes them more difficult to model and hinders the implementation of findings.27 29 Participative, facilitated modelling approaches have been proposed as one way to overcome these problems.30 In these approaches, expert modellers directly collaborate with a team of clients and stakeholders in workshops to build and analyse a computer model, as opposed to the traditional approach where experts build and analyse the simulation independently and only present results to the clients and stakeholders of the project. 
However, in most peer-reviewed academic publications, the simulation expert is a researcher, the project is funded by a research grant rather than by the healthcare organisation,12 and the project involves limited engagement with practitioners, in stark contrast with other industrial sectors.31 32 There is evidence of simulation projects being carried out by commercial consultancies or directly by healthcare organisations, but these are rarely published in academic journals.33

The lack of research on simulating healthcare delivery systems is lamented in the health services research community,34 but it is possible that this community is unaware that a plethora of papers on computer simulation has been published in the operational research and management science communities.35 Health economists have also used simulation for economic evaluations of new technologies.36 Discussions have remained entrenched in separate research communities and have not yet made a significant impact in the health services research field or in the practice of healthcare management and policy-making.

Various types of computer models can be used for constructive simulation in healthcare, each taking a different perspective on complex systems.

Discrete event simulation

In discrete event simulation (DES), various types of entities (patients, drug prescriptions, ambulances, etc) flow through a system depending on their characteristics, passing from one stage of a healthcare process to the next. Each process stage may require the use of shared resources (staff, MRIs, operating rooms, etc) and has a variable completion time. Most variables (eg, the time required to perform a surgery or the probability that a patient requires a scanner) are described as probability distributions. Each time an entity (eg, a patient) arrives in a new process stage, the system will randomly pick a completion time from the distribution. When many simulations are run, the distribution of the sampled values will come close to the specified probability distribution.
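To make this mechanism concrete, the following is a minimal sketch of a discrete event simulation of a single clinic stage, written in Python with the standard library only. It is an illustration rather than a model from the paper: the function name `simulate_clinic`, the exponential distributions for arrival gaps and consultation times, the first-come-first-served rule and all parameter values are assumptions chosen for the example.

```python
import heapq
import random


def simulate_clinic(n_patients, n_staff, mean_interarrival, mean_service, seed=0):
    """Return the mean waiting time of patients at one process stage.

    Assumptions (illustrative only): patients are seen first come, first
    served; gaps between arrivals and consultation times are exponentially
    distributed; all times share one unit (eg, minutes).
    """
    rng = random.Random(seed)
    # Shared resource: each staff member is stored as the time they next become free.
    free_at = [0.0] * n_staff
    heapq.heapify(free_at)

    clock = 0.0
    total_wait = 0.0
    for _ in range(n_patients):
        # Arrival event: advance the clock by a randomly sampled gap.
        clock += rng.expovariate(1.0 / mean_interarrival)
        # The patient is served by whichever staff member frees up first.
        earliest_free = heapq.heappop(free_at)
        start = max(clock, earliest_free)
        total_wait += start - clock
        # Completion time is sampled from a probability distribution.
        service_time = rng.expovariate(1.0 / mean_service)
        heapq.heappush(free_at, start + service_time)
    return total_wait / n_patients


if __name__ == "__main__":
    for staff in (1, 2):
        wait = simulate_clinic(5000, staff, mean_interarrival=10, mean_service=9)
        print(f"{staff} staff: mean wait {wait:.1f} minutes")
```

Running the function with one and then two staff members, as in the final lines, is a simple form of the 'what-if' comparison that DES studies perform at much larger scale.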

Using DES, it is possible to simulate the impact of different patient schedules, resource allocation or staffing policies on patient flows (waiting times, length of stay, etc).37 For instance, Tako et al38 examined waiting times in a UK obesity care service. Working directly with practitioners to frame the problem and model it, they built a DES model of the obesity clinic and simulated various scenarios, such as modifying the allocation of the workforce to different activities or adding different types of resources. Although adding resources had a positive impact on waiting times in certain parts of the system, the authors showed that this may only shift the issue to other parts of the system and may even worsen the outcomes on some indicators. These results highlight important trade-offs in the management of healthcare systems. The study resulted in a decision to add more surgeons, rather than more physicians and to revise eligibility criteria for bariatric surgery. Other examples of using DES include the prospective evaluation of new models of primary care delivery in terms of costs and service quality39 and identifying the best ordering policies to improve the blood supply chain inside hospitals.40

System dynamics

The second computer simulation approach is system dynamics (SD). It is underpinned by the assumption that ‘the complex behaviours of organisational and social systems are the result of ongoing accumulations—of people, material or financial assets, information, or even biological or psychological states—and both balancing and reinforcing feedback mechanisms.’41 Therefore, SD does not explicitly model individual agents but ‘stocks’ and ‘flows’ of patients or other agents (eg, staff), and works at a more aggregated level than DES to show how organisational and social structures influence the behaviour of systems.42 Structure in an SD model refers to the causal chains that underlie events, represented in the model as a network of interrelated causal loops between variables. SD can also include so-called ‘soft’ variables43 representing constructs whose quantitative measurement is controversial, such as ‘patient satisfaction’, and which are not easy to include in DES. SD is often preferred over DES for larger systems and longer timescales.5
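The stock-and-flow logic can be sketched in a few lines of Python. This is a hedged illustration, not a model from the cited studies: the waiting-list scenario, the function name `simulate_waiting_list`, the dropout feedback and all numerical values are assumptions. A single stock (patients waiting) accumulates the difference between an inflow (referrals) and two outflows (treatments and dropouts), and the dropout outflow grows with the stock, which forms a balancing feedback loop.

```python
def simulate_waiting_list(weeks, referrals_per_week, capacity_per_week,
                          initial_waiting=0.0, dropout_fraction=0.0, dt=0.25):
    """Simulate one stock (patients waiting) with simple Euler integration.

    All names and values are hypothetical. Returns the stock level at
    every time step, starting with the initial value.
    """
    waiting = initial_waiting
    history = [waiting]
    steps = int(round(weeks / dt))
    for _ in range(steps):
        # Outflow 1: treatment, limited by capacity and by who is waiting.
        treated = min(capacity_per_week, waiting / dt)
        # Outflow 2: dropouts, proportional to the stock (balancing feedback).
        dropouts = dropout_fraction * waiting
        net_flow = referrals_per_week - treated - dropouts
        # The stock accumulates the net flow over the time step.
        waiting = max(0.0, waiting + net_flow * dt)
        history.append(waiting)
    return history


if __name__ == "__main__":
    no_feedback = simulate_waiting_list(10, 100, 90, initial_waiting=200)
    with_feedback = simulate_waiting_list(104, 100, 90, initial_waiting=200,
                                          dropout_fraction=0.1)
    print(no_feedback[-1], round(with_feedback[-1], 1))
```

Without the feedback term the stock grows steadily whenever referrals exceed capacity; with it, the list settles at the level where dropouts absorb the excess referrals. Note that the model tracks aggregate quantities only, with no individual patients, which is the key contrast with DES.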

An example of SD application is a series of papers on the impact of Chlamydia trachomatis, a common sexually transmitted infection.44 45 In this study, researchers collaborated with a hospital department in Portsmouth, UK, to inform a decision on the deployment of a new screening intervention. The simulation study complemented a screening trial in Portsmouth. Based on insights gathered through statistical analysis of empirical public health data, an SD model was used to evaluate the impact of the disease (prevalence, cost), its dynamics (roles of different groups in the diffusion of the infection) and how it is affected by different interventions (screening, targeted screening). This research was used to inform the local organisation of care in genitourinary medicine through a better targeting of screening interventions. Other examples include the prospective evaluation of the need for dental care in Sri Lanka to adjust dental training capacities26 and a study of the dynamics of drug supply chains to avoid shortages of essential medications.46

Agent-based simulation

Agent-based simulation (ABS) models examine interactions between heterogeneous autonomous agents who obey a set of rules. At each moment in the simulation, each agent (eg, an individual patient, doctor or nurse) applies the rules based on its local situation to determine its next state. For instance, if an agent representing a patient lives in an environment where a certain disease is prevalent, it may switch states from ‘susceptible’ to ‘infected’. The change of state may be influenced by certain characteristics of the agent, such as sex or age. When switching states, the agent in turn modifies the local context for its neighbours. In the case of an epidemic, the agent may then contaminate the other agents in its household.
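The rule-based behaviour described above can be sketched as follows. This is a minimal illustration under stated assumptions, not a model from the literature cited here: the `Agent` class, the `step` and `run` functions, the risk values and the age threshold are all hypothetical. Each susceptible agent applies the same rule to its local situation (whether its household contains an infected agent, and how prevalent infection is overall), and its age modifies the risk.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    household: int
    age: int
    state: str = "susceptible"


def step(agents, rng, household_risk=0.3, community_risk=0.02,
         elderly_multiplier=1.5):
    """Apply the infection rule to every agent once; return new infections."""
    infected = [a for a in agents if a.state == "infected"]
    infected_households = {a.household for a in infected}
    prevalence = len(infected) / len(agents)
    newly_infected = []
    for agent in agents:
        if agent.state != "susceptible":
            continue
        # Local context: community prevalence plus exposure within the household.
        risk = community_risk * prevalence
        if agent.household in infected_households:
            risk += household_risk
        # Agent characteristic: older agents are assumed to be at higher risk.
        if agent.age >= 65:
            risk *= elderly_multiplier
        if rng.random() < min(1.0, risk):
            newly_infected.append(agent)
    # States change together, after every agent has evaluated its rule.
    for agent in newly_infected:
        agent.state = "infected"
    return len(newly_infected)


def run(n_households=50, household_size=4, n_steps=20,
        community_risk=0.02, seed=1):
    """Seed one infection and return the agents and infected counts per step."""
    rng = random.Random(seed)
    agents = [Agent(household=h, age=rng.randint(0, 90))
              for h in range(n_households) for _ in range(household_size)]
    agents[0].state = "infected"
    counts = []
    for _ in range(n_steps):
        step(agents, rng, community_risk=community_risk)
        counts.append(sum(a.state == "infected" for a in agents))
    return agents, counts


if __name__ == "__main__":
    _, counts = run()
    print(counts)
```

Setting `community_risk=0.0` confines the infection to the first household, while larger values let it spread between households. The epidemic curve is not coded anywhere in the model; it results from the individual rules.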

In this way, ABS is directly related to the concept of emergence, where the behaviour of a system stems from the aggregated effect of individual behaviours at a lower level. This is the core of the ‘complex adaptive system’ perspective, in which organisations are understood as ‘collection(s) of individual agents with freedom to act in ways that are not always totally predictable, and whose actions are interconnected so that one agent’s action changes the context for other agents’.1 This is different from more mechanistic perspectives where individuals are bound to follow established procedures and rules. The ABS approach makes it possible to model the impact of individual behaviour at a system level and to account for variety among agents. ABS has been adopted in various social sciences disciplines, including sociology47 and management research.48 Methodological introductions are available in the literature.47 49

ABS was developed more recently than DES or SD for modelling organisations and it is not yet well diffused in healthcare as a simulation approach.50 However, some examples are starting to appear. For instance, Barnes et al51 simulated the impact of various policies and practices, such as hand hygiene compliance and efficacy, patient screening, decolonisation, isolation and staffing on the spread of methicillin-resistant Staphylococcus aureus (MRSA). They identified the impact of staff-to-patient ratios on transmission rates and found that non-compliance with hand hygiene rules by rogue physicians constituted a significant transmission factor. Although this result has clear managerial consequences, the project was a research initiative and no engagement with managers or policy-makers was mentioned in the article. On a related topic, Macal et al52 used ABS to simulate community-associated MRSA in the Chicago area. Their findings show that colonised agents (ie, people who carry the bacteria but are asymptomatic) play a greater role in transmission than infected people, thus challenging the current paradigm for tackling MRSA infection. Again, the authors do not mention engaging directly with stakeholders in this study, although the results could have informed local public health policies.

Virtual simulation

While live simulations involve real participants operating ‘physical’ systems in vivo, and constructive simulations use fully in silico models to simulate organisations, virtual simulations represent an intermediate mode. They involve human participants interacting with computer models of complex organisations, in a type of ‘human-in-the-loop’ computer simulation where participants periodically provide inputs to the computer model, thus influencing the course of the simulation. The advantage compared with in vivo simulation is that computer models can rapidly compute a large amount of data and simulate a long timescale (from days to years) in a few seconds. Compared with constructive simulation, virtual simulation allows researchers to study real-time decision-making by asking for input from participants. The decisions made by these human participants are closer to real decision patterns than the rules coded in the computer models of pure constructive simulations. However, virtual simulations can be difficult to implement. Among other factors, careful attention must be paid to the clarity of the goals of the project (learning objectives for participants, research objectives for observers), to the computer models used (level of complexity, capacity to illustrate clearly and convincingly the phenomena of interest), and to the way the simulation is delivered (briefing, debriefing, computer interface).53 54

For research, this method is used to surface mental models, elicit decision-making processes, identify biases and test theories on decision-making.55 We are not aware of the use of this type of simulation in healthcare management and policy-making. In climate change policy, a study using a virtual simulation showed that a large proportion of participants did not properly understand how variations in carbon emissions relate to the atmospheric concentration of carbon dioxide.56 This is attributed to a fundamental misunderstanding of the accumulation phenomenon that partly drives the behaviour of such systems. Other studies have shown that when faced with decisions in complex economic systems, people often apply simple decision patterns that fail to take into account ‘feedback’ phenomena (where the output of a process also affects its input, as for instance in ‘vicious’ or ‘virtuous’ cycles).57

When used for training, the objective is to develop managers’ understanding of the factors and phenomena affecting system performance. For instance, Pennock et al58 developed a policy flight simulator on the transitional care model, where advanced practice nurses lead the transition of older adults with multiple chronic conditions from a hospitalisation to home. The authors built a computer simulation that shows how this model affects the income of a hospital, depending on factors such as payment system parameters, patient eligibility criteria or bed replacement rates and the uncertainty on these. They also built a system-level model of how the adoption of the transitional care model varies across an entire healthcare system, depending on the cost and efficacy of, and providers’ beliefs about, this model, and systems characteristics. A qualitative evaluation by senior healthcare executives showed high interest and multiple potential uses of such tools, for instance, for ‘what-if’ analysis for one provider or for negotiations around funding with insurers.

Another example of virtual simulation in healthcare management is Bean et al’s hospital simulator, where participants can distribute the hospital’s resources between different wards and establish policies for waiting queues (eg, ‘first come first served’ vs ‘high priority patients first’). The computer model then simulates the impact of these decisions on the hospital’s performance.59 Beyond their use as decision-support tools, these virtual simulations could be used for training, or for analysing decision-making patterns of healthcare executives. In other sectors, virtual simulations have been used to study decision-making in the exploitation of natural resources,60 or on the effect of incentives on the development of alternative fuels in automobile markets.61

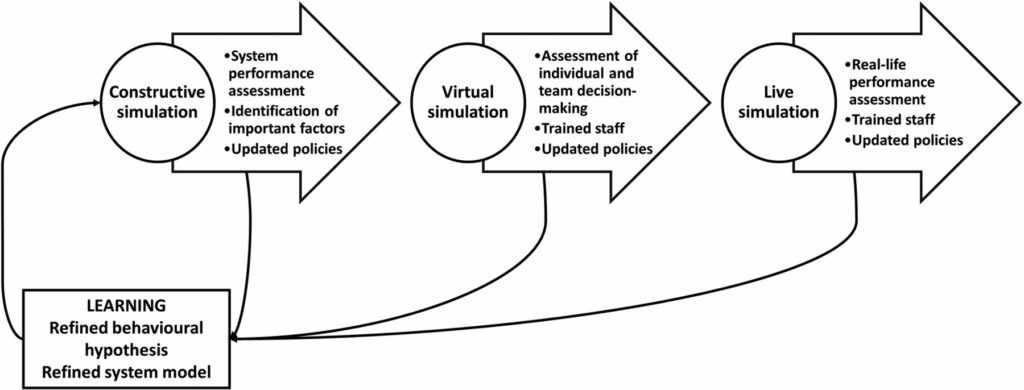

Combining simulation approaches to improve healthcare management and policy-making

We have presented and discussed three different modes of simulation used to study and improve healthcare management and policy-making. Table 1 summarises their characteristics. Each of these three modes has strengths and limitations. However, used in combination, they could be complementary. The integrated use of live, virtual and constructive simulations is an important objective in military training.62

Table 1

Characteristics of the three modes of simulation

To illustrate how this approach could be useful in healthcare management and policy-making, one can consider the issue of preparing for mass casualty incidents (MCIs) due to environmental disasters, terrorist attacks or industrial hazards. Live simulations are already used to assess organisations’ preparedness for such events.21 However, full-scale drills are long and expensive to organise. One could start by building a constructive simulation of an MCI and test different policies (eg, resource allocation) to evaluate the global performance of the healthcare system. Such models already exist.63 Based on this model, one could then train different types of decision-makers through virtual simulation. Some virtual simulations already exist for MCIs, currently focusing primarily on clinical skills,64 but the approach could be used at managerial level to refine understanding of decision-making in these exceptional conditions and to train participants. Finally, based on the results of constructive simulations and virtual simulations, a scenario for a full-scale live drill could be elaborated. This live drill, which is likely to be the most expensive part of the process, would build on the knowledge accumulated through the other approaches and would act as a reality check to test the models and the hypotheses they make about the real situation. A number of other approaches could be conceived, for instance, small-scale drills for parts of the system (eg, specific types of surgeries associated with MCIs).

As illustrated in figure 1, the process of combining simulation approaches is cyclical, with the understanding of the system’s behaviour and performance refined at each step and policies updated accordingly. This example illustrates the role multiple modes of simulation might play in learning healthcare systems,17 whereby healthcare management and policy-making are iteratively studied to refine understanding and promote improvement.

Evaluating simulation for management and policy-making

There is no standard method for evaluating simulation, as the evaluation criteria would change depending on the context and objectives of the project. Simulation methods and interventions can be considered as a type of ‘learning healthcare system’, in which learning is the mechanism through which organisations continuously improve their performance.17 Indeed, it has often been argued that constructive simulations are learning tools,65 aimed at generating insights into the dynamics of the system being modelled.66 Similarly, behavioural simulation is often used as a training tool16 or to support learning about poorly understood phenomena.18 Virtual simulation is also evaluated in terms of the learning it generates.67 Therefore, one way in which a simulation intervention can be evaluated is on its ability to generate learning.

To enable learning to be assessed, a simulation project should start with a good rationale that specifies what needs to be learnt and by whom. A useful way to express this rationale is by developing a programme theory, connecting the intervention (the simulation project) with desired outcomes and identifying the mechanisms that allow these outcomes to be delivered.68 Learning will be one of the key mechanisms and the programme theory should identify how it is generated. Box 1 offers guiding questions to frame the evaluation.

Box 1

Steps and guiding questions to evaluate simulation interventions

1. Specify objectives

- What are the objectives of the project?

- To achieve these objectives, who needs to learn what?

2. Develop programme theory

- What is the role of simulation in generating learning?

- How will the project achieve learning by the target population?

- What is the evidence supporting this?

- What are the assumptions made in the intervention?

3. Elaborate evaluation strategy

- How can the desired learning be measured on different levels of Kirkpatrick’s framework?

- How can causal relationships in the programme theory be tested?

4. Design and validate simulation

- Is the simulation (the computer model or the behavioural scenario) valid given the objectives of the project?

5. Deliver simulation

6. Evaluate programme theory

- Did the intervention deliver the desired outcomes?

- Were the assumptions verified?

- What questions remain unanswered?

In practice, an important point is to identify who is learning. In some cases, it will be simulation participants. This is the case when simulation is used as an educational method, where experiential learning (‘learning by doing’) is used to improve the knowledge and skills of simulation participants. In the case of constructive simulation, there are no human participants in the simulation, but it is hoped that ‘client’ decision-makers will gain insights into their organisation from the model developed by experts. For instance, a study on waiting times for obesity care resulted in managers modifying staffing patterns.38 In other cases, those who organise the simulation will be the ones who learn, in particular when simulation is used as a research method to investigate healthcare management and policy-making. For instance, in virtual simulations, participants learn about the dynamics of complex organisations through interacting with models, but researchers also learn about the decision-making patterns of managers through observation. This may then affect the way they construct improvement interventions and ultimately improve the impact of these interventions. It may also generate more generic knowledge on the behaviour of healthcare managers and policy-makers, which contributes to scientific knowledge and theory.

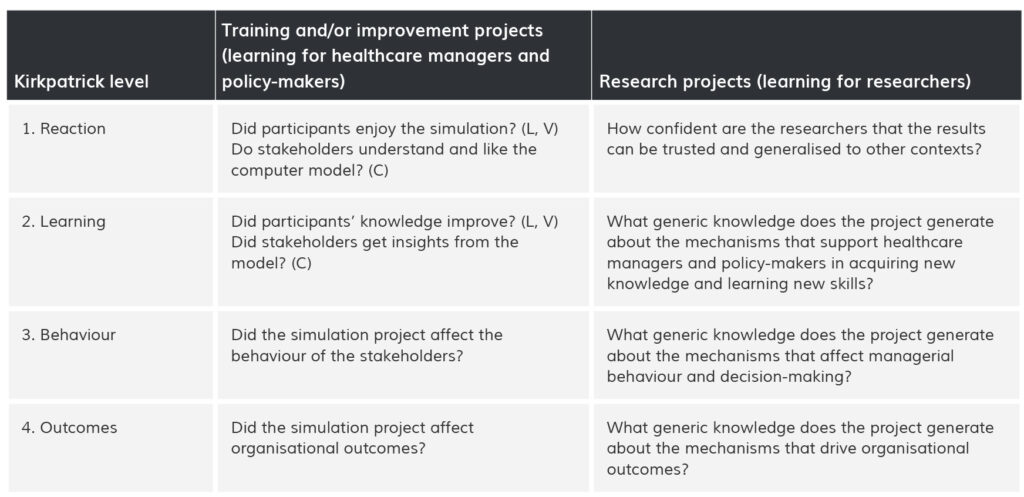

To specify the learning outcomes of different stakeholders in simulation projects, a useful starting point is Kirkpatrick’s model for evaluating learning.69 Kirkpatrick evaluates training interventions on four levels:

- Reaction: did participants find the training engaging and useful?

- Knowledge: did participants actually learn something?

- Behaviour: did the intervention affect participants’ behaviour in practice?

- Results/outcomes: did the intervention improve organisational performance?

The Kirkpatrick framework works under the assumption that through engaging, useful training, participants will acquire new knowledge, which they will use in practice to modify their behaviour, ultimately resulting in better organisational performance. This model has been used to evaluate clinical simulation,16 and it could easily be transferred to live or virtual simulation in management and policy-making. It can also be used to evaluate constructive simulation models. The main difference from clinical practice is that the lead time from individual managers making real-time decisions to improving outcomes on the frontline can be very long, as opposed to improvements on frequent surgical operations for instance. Therefore, it is important that programme theories integrate intermediate outcomes such as increased awareness of specific issues (‘knowledge’) or modifications in decisions being made (‘behaviour’). For instance, studies have assessed how different ways of presenting the results of computer simulations affect the ‘insights’ generated by the model, which is a way to measure ‘knowledge’ in Kirkpatrick’s framework,66 while others have looked at how involving stakeholders while building the model affects the uptake of the results, as a way to assess ‘behaviour’.70 These studies contribute to a better understanding of how simulation creates learning among managers and help refine the delivery of simulation interventions.

Beyond the definition of outcomes, the learning paradigm has implications for the assessment of the validity of simulations, that is, the computer models or the behavioural scenarios developed and used in simulation interventions. This assessment needs to be related to the objectives of the project. Ultimately, all models only replicate a simplified version of reality, but some are useful because they help us understand important aspects of the real world.43 As a result, simulations cannot be valid ‘in essence’, but they need to be fit for purpose and in line with the learning objectives detailed above. In particular, researchers need to look for a balance between the realism of simulations and their suitability for the purpose of the simulation project.71–73 For instance, a computer simulation with poor ‘validity’ when compared with real-world data can sometimes still generate useful insights.74 This issue of external validity is common to all simulation methods, but should always be considered in relation to the objectives of the simulation project.75 76 Similarly, very rough simulators have proved useful in clinical simulation, such as chicken breasts and latex balloons used to simulate ultrasound-guided venous access.77 This is because these models allow the right conversations and reflections to take place, and enable learning to happen at the right level of abstraction. A basic computer model or behavioural simulation could exemplify important phenomena at a more abstract level, for instance, the accumulation of patients in waiting queues or the fear of speaking out about concerns.
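To make the idea of a deliberately basic model concrete, the following sketch shows how the accumulation of patients in a waiting queue could be reproduced in a few lines. The function name, the weekly time step and the referral and capacity figures are illustrative assumptions, not taken from any of the studies cited above.

```python
def simulate_waiting_list(weekly_referrals, weekly_capacity, weeks, initial_backlog=0):
    """Return the backlog at the end of each week for a single waiting list.

    Each week, new referrals join the list and up to `weekly_capacity`
    patients are seen; the backlog can never fall below zero.
    """
    backlog = initial_backlog
    history = []
    for _ in range(weeks):
        backlog = max(0, backlog + weekly_referrals - weekly_capacity)
        history.append(backlog)
    return history


if __name__ == "__main__":
    # Hypothetical clinic: demand slightly above capacity, so the queue grows steadily.
    print(simulate_waiting_list(weekly_referrals=55, weekly_capacity=50, weeks=4))
    # Hypothetical clinic: capacity slightly above demand, so an existing backlog clears.
    print(simulate_waiting_list(weekly_referrals=45, weekly_capacity=50, weeks=3, initial_backlog=8))
```

Such a model has little realism, since it ignores variability, priorities and cancellations, but it may be enough to prompt a discussion about why a small, persistent gap between demand and capacity produces an ever-growing queue.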

With regard to specific evaluation designs, the evaluation of simulation interventions can draw on the toolkit of healthcare improvement research.78 79 Simulation training projects can use a whole range of designs, from randomised clinical trials looking at individual or team learning, to time-series analysis looking at the impact of simulation interventions on organisational performance. However, for projects using constructive simulations, it is more difficult to design controlled studies of the impact of the simulation intervention on organisational performance, as the unit of study is the whole organisation (a department, a hospital, a regional or national health system) for which the model is developed. In this case, pre–post longitudinal studies comparing the performance before and after the computer simulation intervention are easier to implement. This is how Monks et al studied the impact of a computer simulation project on emergency stroke care using a simple pre–post design. The authors complemented this quantitative analysis with a qualitative study assessing the impact of involving clinicians at various stages of the modelling process.70
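The arithmetic behind a simple pre–post comparison can be sketched as follows. This is a minimal illustration only: the `pre_post_change` function and the monthly figures are invented for the example and do not reproduce the analysis or data of the study cited above.

```python
from statistics import mean


def pre_post_change(pre, post):
    """Compare mean performance before and after an intervention.

    `pre` and `post` are sequences of the same indicator (for example,
    a monthly waiting time in minutes) measured before and after the
    simulation project.
    """
    pre_mean = mean(pre)
    post_mean = mean(post)
    return {
        "pre_mean": pre_mean,
        "post_mean": post_mean,
        "absolute_change": post_mean - pre_mean,
        "relative_change": (post_mean - pre_mean) / pre_mean,
    }


if __name__ == "__main__":
    # Made-up monthly values, for illustration only.
    before = [60.0, 62.0, 58.0, 60.0]
    after = [50.0, 52.0, 48.0, 50.0]
    result = pre_post_change(before, after)
    print(f"Change: {result['absolute_change']:.1f} min "
          f"({result['relative_change']:.1%})")
```

Because there is no control group, a difference computed this way cannot by itself separate the effect of the intervention from underlying trends, which is one reason for complementing it with qualitative evidence.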

When simulation is used as an investigative method for research purposes, the objective is not necessarily to generate practical improvements or to increase the skills of a target population, but rather to generate generic knowledge and to contribute to theory about healthcare management and policy-making. In this case, the people learning from the simulation will be the researchers organising it, and the learning will often happen at a more abstract, theoretical level than when simulation is used for training or improvement. The research project will focus on understanding the mechanisms that affect managerial behaviour and organisational performance in healthcare. For instance, business researchers have used virtual simulation to explore the mental models managers apply in decision-making,57 and health services researchers have used computer models to understand the underlying factors that affect physicians’ decisions on the mode of delivery and ultimately the rate of caesarean sections.80 The Kirkpatrick framework was not originally designed to evaluate such examples, but it can still help guide thinking about these issues. Table 2 shows how the four levels of the Kirkpatrick model can be turned into questions that can guide the evaluation of interventions using simulation for training and improvement, as well as the framing of studies using simulation as a research method in healthcare management and policy-making.

Table 2

Application of the Kirkpatrick framework to training/improvement projects and research projects

L, live simulation; V, virtual simulation; C, constructive simulation.

Conclusion

This paper provides an integrative view of the different simulation approaches that can be used to investigate, understand and improve healthcare management and policy-making. For managers, policy-makers and evaluators, simulation can be used to enrich understanding of specific healthcare systems and as part of improvement toolkits. In a professional context, simulation is often a quicker, safer and less expensive way to experiment with system changes. However, researchers should note the specific limitations associated with each simulation approach, both in terms of the required investment and expertise and the type of situations they can reproduce. The artificial character of simulation environments may also reduce the external validity of some findings; therefore, simulation users should always keep in mind the objectives of their project and what they want to learn through simulation. When these caveats are handled properly, there is a strong rationale for and clear advantages to using simulation techniques. To allow simulation to reach its full potential, improvement researchers and professionals in healthcare management and policy-making can draw on the extensive experience of other disciplines and industrial sectors, where simulation has already proven its worth.

Footnotes

- Contributors GL had the original idea for the paper and both authors developed the content. GL wrote the first draft of the paper, and RKS adapted and revised this draft. GL and RKS approved the submitted version.

- Funding GL and RKS are supported by the Health Foundation’s grant to the University of Cambridge for The Healthcare Improvement Studies (THIS) Institute. THIS Institute is supported by the Health Foundation—an independent charity committed to bringing about better health and health care for people in the UK.

- Competing interests None declared.

- Provenance and peer review Not commissioned; externally peer reviewed.

Notes

Re-publishing of this article is allowed pursuant to the terms of the Creative Commons Attribution 4.0 International Licence (CC BY 4.0).